UBC medical students had long used a quiz platform developed by New York University (NYU) to practice diagnosing conditions from electrocardiograms (ECGs), a core skill for working in healthcare. But NYU was deprecating its tool. With over 12,000 ECG images in circulation, UBC wanted to develop its own in-house version, tailored to its medical curricula.

Previous Web App Hosted by NYU

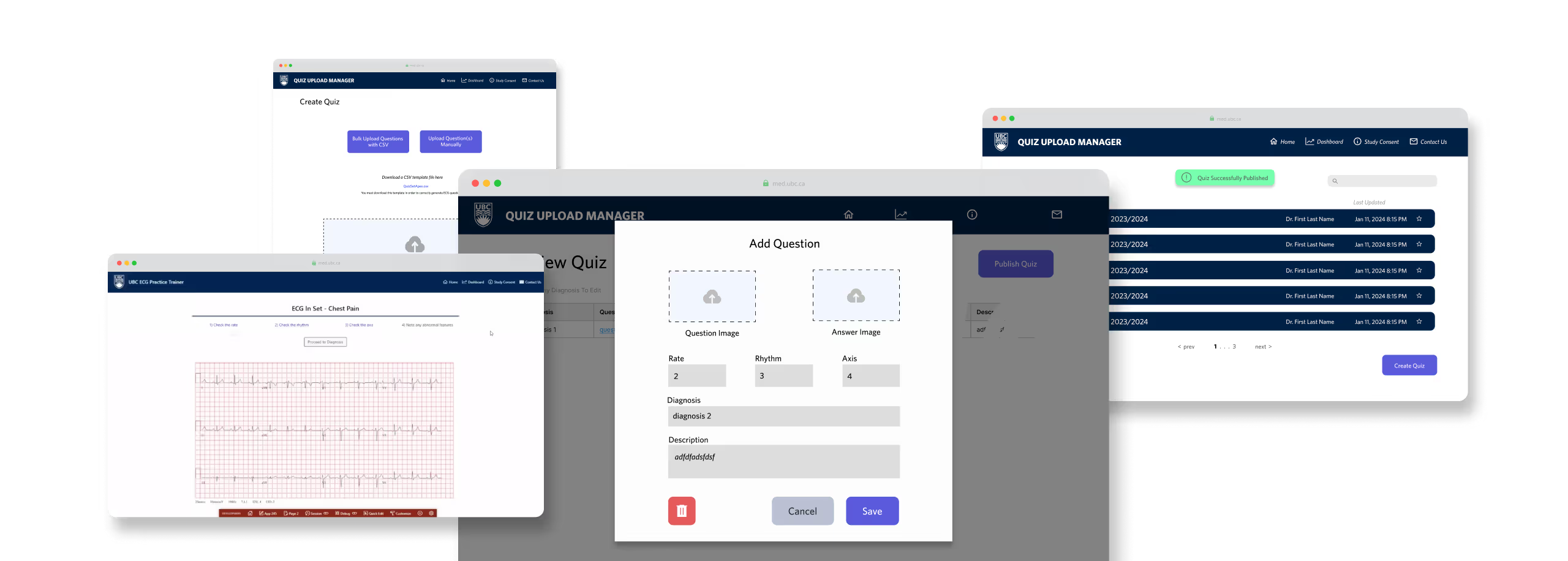

The foundational testing functionality had been built, but the tool wasn’t yet ready for deployment. My role focused on designing the instructor-facing features that would allow faculty to upload, organize, and manage custom quizzes.

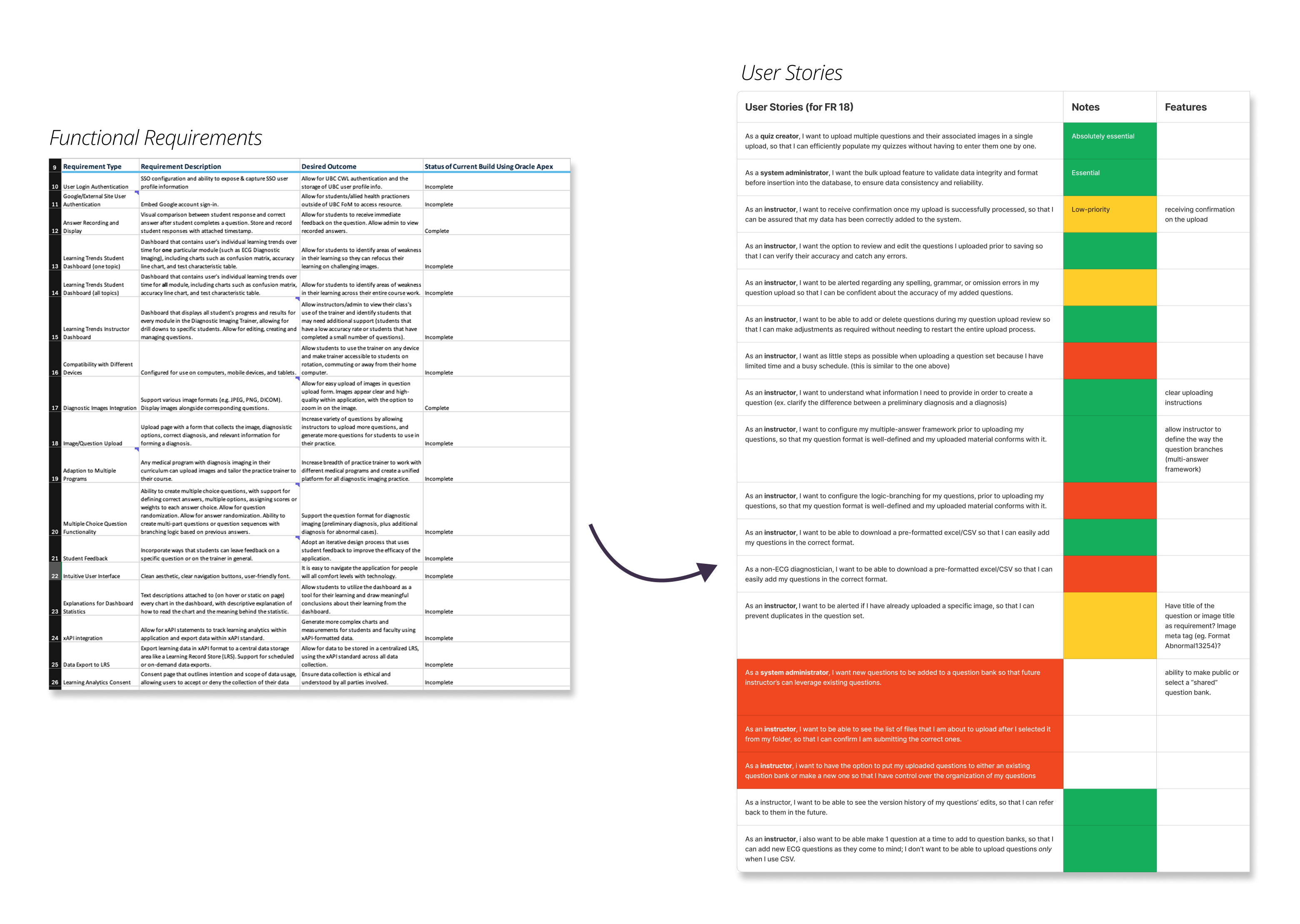

Turning Requirements Into Real Use Cases

✦. ── Pre-Design Analysis

We received a list of functional requirements from UBC Medicine faculty, most of which had not been met in the current version of the virtual tutor. To clarify priorities and align the design with real needs, I led my team in translating these requirements into user stories grounded in actual usage contexts. In doing so, we identified three core user groups: Faculty, Admin, and Students. Each user story applies to one of these three groups.

Synthesizing User Stories

After reviewing and prioritizing features, we focused on one critical gap: the ability for course staff to create and manage custom ECG quizzes. Previously, UBC faculty used quizzes hosted on NYU's platform, developed in collaboration with UBC but maintained only by NYU. Now that the tool was being brought in-house, we needed to design a new workflow that let instructors and course staff generate and tailor quizzes to their specific courses and cohorts, something they had never been able to do before.

Borrowing from Quizlet (Because It Works)

✦. ── Analyzing Competitor Tools in the Private Sector

We reviewed early user research (conducted before I joined) on how medical students study outside the original ECG platform. The findings showed that Quizlet was the most frequently used tool, and the same data showed it was also widely recognized among faculty and staff. So rather than inventing a new interaction model, I leaned into users' existing mental models.

By referencing Quizlet's familiar card-based structure and quiz flow, I mirrored an interface that students already knew how to use, reducing onboarding time and cognitive load. Adopting familiar interaction patterns was meant to ease the transition and keep the tool usable from day one.

Live Notes from Analyzing Private-Sector Tools

Intentionally Making the UI “Ugly”

✦. ── High-Fidelity Prototyping

Early user research (conducted before I joined) revealed that while students and faculty didn't find the NYU interface visually interesting, they valued its simplicity and familiarity. Our data archives also showed that medical students repeatedly expressed frustration when learning tools changed abruptly, especially mid-semester, because relearning a new system added cognitive load to already demanding schedules.

Initially, I wanted to redesign the interface to be sleek and modern. But I came to understand that a visual overhaul wasn't the priority; minimizing friction was. No bells. No whistles. Just a tool that helps students resume ECG practice quickly and intuitively, even if that meant keeping the interface "ugly."

UI Design for Creating ECG Quizzes

My team and I made a deliberate decision to keep the UI plain and closely aligned with NYU's original design. The goal was to reduce disruption and enable a smooth transition to UBC's in-house version.

I combined NYU's visual structure with Quizlet-inspired workflows to support familiar interactions. This approach also aligned with UBC's existing Oracle APEX system, simplifying backend integration for our developers.

Final Design: Upload → Build → Practice

✦. ── Design Showcase

As my internship came to a close, I wrapped up this first iteration with a working upload and quiz-builder flow. These designs were handed off to the next UX team to expand student-facing features.

Finalized User Flow for Making ECG Quizzes

Good-Looking Doesn’t Mean Good for Users

✦. ── What I Learned

Pretty doesn’t mean usable

The clean, polished aesthetics typical of the private sector mean little in a learning tool if they get in the way of the actual task. At the end of the day, a good-looking Figma prototype isn't necessarily good for our users.

Familiarity reduces friction

We don't always need to "innovate," especially in visual design. We should do so only when it helps the user. To reduce friction, we first need to consider users' existing mental models of interaction.

Not Every Usability Issue Can Be Solved in Figma

Some usability issues emerge only at the implementation stage. For example, Oracle APEX required uniquely named image files to avoid overwriting existing data, a constraint most users wouldn't think about. This limitation couldn't be fixed in the UI alone, so I worked with developers to explore backend solutions. It reinforced that good UX often requires cross-functional thinking beyond design mockups.